Dual space (linear algebra)


In linear algebra, the dual V∗ of a finite-dimensional vector space V is the vector space of linear functionals (also known as one-forms) on V. Both spaces, V and V∗, have the same dimension. If V is equipped with a non-degenerate inner product, V and V∗ are naturally isomorphic, which means that there exists a linear one-to-one correspondence between the two spaces that is defined without use of bases. (This isomorphism does not generally exist for infinite-dimensional inner product spaces.)

The importance of the dual vector space in tensor calculus arises from the fact that elements of V and of V∗ transform contragrediently under a change of basis. One expresses this by stating that the components of a mixed tensor that refer to V are contravariant and those that refer to V∗ are covariant. Contracting a covariant with a contravariant index yields a tensor of lower rank.

In crystallography and solid state physics the dual space of ℝ3 is often referred to as reciprocal space.

Definition

Consider first an arbitrary finite-dimensional vector space V over the field ℝ of real numbers, not necessarily equipped with a norm or an inner product. The dual space V∗ of V is distinct from V. Since V∗ consists of linear functionals on V, these are introduced first.

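A functional α on V is a map

$$\alpha:\; V \to \mathbb{R}, \qquad v \mapsto \langle \alpha \mid v \rangle .$$

The functional α is linear if

$$\langle \alpha \mid c\,v + c'\,w \rangle = c\,\langle \alpha \mid v \rangle + c'\,\langle \alpha \mid w \rangle \qquad \text{for all } v, w \in V \text{ and } c, c' \in \mathbb{R}.$$

It follows from linearity (take c = c′ = 0) that every linear functional α maps the zero element of V onto the number 0 ∈ ℝ.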

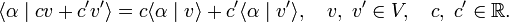

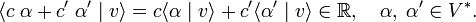

If linear combinations of functionals are defined pointwise (i.e., distributivity is postulated), the space V∗ of linear functionals is a vector space; that is, if α and α′ are linear functionals, then so is cα + c′α′,

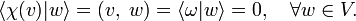

The zero vector of V∗ is the linear functional that maps every element of V onto 0 ∈ ℝ; this zero functional is written as ω.

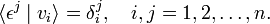

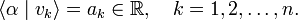

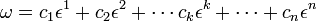

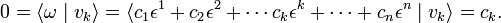

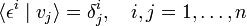

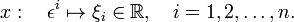

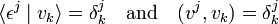

The dimensions of a vector space and its dual are equal. In order to show this, we let v1, v2,..., vn be a basis of V. Any element of V can be uniquely expanded in terms of these n basis elements. Further, when the effect of an arbitrary linear functional α ∈ V∗ on the n basis elements of V is given, the effect of α on any element of V is determined uniquely; this follows from the linearity of α. Consider now the n linear functionals εj defined by

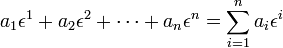

where δji is the Kronecker delta (unity if i = j, 0 otherwise). Any linear functional can be expressed as a linear combination of the εj. Indeed, let an arbitrary linear functional α be given by its values on the basis,

$$\langle \alpha \mid v_i \rangle = a_i, \qquad i = 1, \ldots, n.$$

The functional

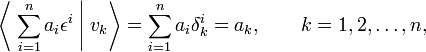

clearly has the same effect as α on the basis,

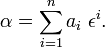

so that the arbitrary linear functional α is equal to the linear combination

$$\alpha = \sum_{j=1}^{n} a_j\, \varepsilon_j .$$

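Further, the εj are linearly independent. That is, if

$$\sum_{j=1}^{n} c_j\, \varepsilon_j = \omega ,$$

then ck = 0 for all k = 1, ..., n. To see this, take an arbitrary k with 1 ≤ k ≤ n and evaluate both sides on vk:

$$0 = \langle \omega \mid v_k \rangle = \sum_{j=1}^{n} c_j\, \langle \varepsilon_j \mid v_k \rangle = \sum_{j=1}^{n} c_j\, \delta_{jk} = c_k .$$

Hence in the expansion of the zero vector ω all expansion coefficients are zero, and it follows that the elements {εj | j = 1, 2, ..., n} are linearly independent. Therefore, the εj form a basis of V∗ and dim V∗ = dim V = n.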

The bases v1, v2, ..., vn and ε1, ε2, ..., εn, which satisfy

$$\langle \varepsilon_j \mid v_i \rangle = \delta_{ji}, \qquad i, j = 1, \ldots, n,$$

are called biorthogonal (or sometimes dual).
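The construction of the εj can be checked numerically. A minimal sketch, assuming V = ℝ3 and a hypothetical non-orthogonal basis: if the basis vectors are placed in the columns of a matrix P, the coefficient rows of the biorthogonal functionals εj are the rows of P−1, because (P−1P)ji = δji. Exact `Fraction` arithmetic avoids rounding; the helper names are illustrative only.

```python
from fractions import Fraction


def inverse(m):
    """Exact Gauss-Jordan inverse of a square matrix given as a list of rows."""
    n = len(m)
    a = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(m)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [x / p for x in a[col]]
        for r in range(n):
            if r != col and a[r][col] != 0:
                f = a[r][col]
                a[r] = [x - f * y for x, y in zip(a[r], a[col])]
    return [row[n:] for row in a]


def pair(row, vec):
    """<alpha | v> for a functional given by its coefficient row."""
    return sum(x * y for x, y in zip(row, vec))


basis = [(1, 0, 0), (1, 1, 0), (1, 1, 1)]                # hypothetical basis v1, v2, v3
n = len(basis)
P = [[basis[i][r] for i in range(n)] for r in range(n)]  # basis vectors as columns
eps = inverse(P)                                         # row j = coefficients of eps_j

# Biorthogonality <eps_j | v_i> = delta_ji
gram = [[pair(eps[j], basis[i]) for i in range(n)] for j in range(n)]
print(gram == [[1, 0, 0], [0, 1, 0], [0, 0, 1]])         # True

# Expansion of an arbitrary functional: alpha = sum_j <alpha|v_j> eps_j
alpha = (2, -1, 5)
a = [pair(alpha, v) for v in basis]
rebuilt = [sum(a[j] * eps[j][k] for j in range(n)) for k in range(n)]
print(rebuilt == list(alpha))                            # True
```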

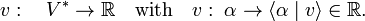

The dual of the dual

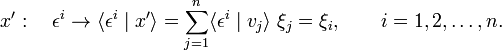

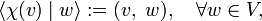

Since the linear space V above was arbitrary, the dual of any finite-dimensional linear space may be defined. A natural question is: what is the dual of V∗? The answer is V (up to a natural isomorphism). To see this, we interpret v ∈ V as a mapping of α onto an element of ℝ,

$$v:\; V^{*} \to \mathbb{R}, \qquad \alpha \mapsto \langle \alpha \mid v \rangle .$$

As a mapping, v acts to the left in the bracket ⟨..|..⟩. If desired, one could let v act to the right by defining

- ⟨ v | α⟩ := ⟨ α | v ⟩,

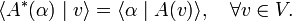

but this definition is not necessary and will not be used. In any case, v is a functional on V∗. It is a linear functional because (v acts to the left)

$$\langle c\,\alpha + c'\,\alpha' \mid v \rangle = c\,\langle \alpha \mid v \rangle + c'\,\langle \alpha' \mid v \rangle ,$$

which is just the definition of the linear combination cα + c′α′ in V∗.

The domain of v is all of V∗, because by definition V∗ contains all linear functionals on V: every linear functional β on V belongs to V∗, so the action ⟨β|v⟩ of v on β is defined, i.e., β is in the domain of v. Conversely, suppose that x is an arbitrary linear functional on V∗ with

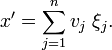

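Consider the element x′ ∈ V with the components ξi,

$$x' := \sum_{i=1}^{n} \xi_i\, v_i .$$

By linearity of the εj, the functional x′ maps the basis of V∗ as follows,

$$\langle \varepsilon_j \mid x' \rangle = \sum_{i=1}^{n} \xi_i\, \langle \varepsilon_j \mid v_i \rangle = \sum_{i=1}^{n} \xi_i\, \delta_{ji} = \xi_j, \qquad j = 1, \ldots, n.$$

Hence x′ and x act identically on a basis of V∗, and since both are linear, x′ = x. It follows that V contains all linear functionals on V∗. Conclusion: V∗∗ (the space of all linear functionals on V∗) is equal to V. The pairing

$$\langle \alpha \mid v \rangle, \qquad \alpha \in V^{*},\; v \in V,$$

is symmetric: α (∈ V∗) maps v, and v (∈ V and ∈ V∗∗) maps α, onto the same real number. Note that the natural isomorphism between V and V∗∗ is defined without need for an inner product or a basis on V.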
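The natural map V → V∗∗ can be written down without choosing a basis, which is what makes it natural. A minimal sketch, assuming vectors of ℝ2 are encoded as tuples and functionals as Python callables (`ev` is a hypothetical name): each v becomes the functional α ↦ ⟨α|v⟩ on V∗.

```python
def ev(v):
    """Natural map V -> V**: v becomes the functional alpha -> <alpha|v> on V*."""
    return lambda alpha: alpha(v)


# Two functionals on V = R^2 and their pointwise combination 2*alpha + 5*beta
def alpha(x):
    return 3 * x[0] - x[1]


def beta(x):
    return x[0] + 2 * x[1]


def two_alpha_plus_five_beta(x):
    return 2 * alpha(x) + 5 * beta(x)


v, w = (4, 1), (-1, 2)
phi = ev(v)

# ev(v) is linear on V*: phi(2*alpha + 5*beta) == 2*phi(alpha) + 5*phi(beta)
print(phi(two_alpha_plus_five_beta), 2 * phi(alpha) + 5 * phi(beta))  # 52 52

# ev is itself linear in v: ev(v + w)(alpha) == ev(v)(alpha) + ev(w)(alpha)
v_plus_w = (v[0] + w[0], v[1] + w[1])
print(ev(v_plus_w)(alpha) == ev(v)(alpha) + ev(w)(alpha))  # True
```

No basis appears anywhere in `ev`; a basis is needed only in the proof above that every element of V∗∗ arises this way.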

Dual transformations

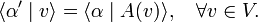

Let A be an arbitrary endomorphism of V (linear map V → V). Let α ∈ V∗ and let α′ be the linear functional,

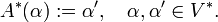

Define the dual transformation A∗: V∗ → V∗ of A by

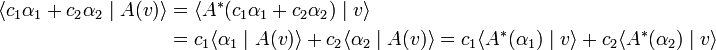

It is easy to show that A∗ is linear. Indeed,

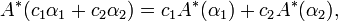

and since this holds for all v, it can be concluded that

$$A^{*}(c\,\alpha + c'\,\beta) = c\,A^{*}\alpha + c'\,A^{*}\beta ,$$

so that A∗ is linear (it is an endomorphism of V∗). As a mnemonic, A∗ is defined by the "turnover rule":

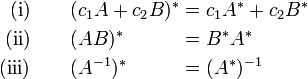

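The dual of the identity E on V is the identity on V∗; the dual of the zero map on V is the zero map on V∗. Let A and B be linear maps of V into itself; then

$$\text{(i)}\;\; (c\,A + c'\,B)^{*} = c\,A^{*} + c'\,B^{*}, \qquad \text{(ii)}\;\; (AB)^{*} = B^{*}A^{*}, \qquad \text{(iii)}\;\; (A^{-1})^{*} = (A^{*})^{-1}.$$

Property (i) is self-evident. Property (ii) follows from

$$\langle (AB)^{*}\alpha \mid v \rangle = \langle \alpha \mid A B v \rangle = \langle A^{*}\alpha \mid B v \rangle = \langle B^{*} A^{*}\alpha \mid v \rangle ,$$

which is true for any v and α. Property (iii) is valid only if the inverse A−1 exists; it then states that the dual A∗ is invertible as well, with inverse (A−1)∗. This property follows immediately from (ii) (take B = A−1) and the fact that the dual of the identity on V is the identity on V∗.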
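A small numerical check of the turnover rule and of properties (ii) and (iii). The sketch assumes V = ℝ2 with its standard basis, vectors encoded as tuples, functionals as coefficient rows, endomorphisms as 2×2 integer matrices, and the turnover rule in the form ⟨A∗α|v⟩ = ⟨α|Av⟩; in this encoding A∗ acts on a row α by right multiplication, α ↦ αA. All helper names are hypothetical.

```python
def apply(A, v):
    """Matrix A (list of rows) acting on the column vector v."""
    return tuple(sum(A[i][k] * v[k] for k in range(len(v))) for i in range(len(A)))


def matmul(A, B):
    """Matrix product AB."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def pair(alpha, v):
    """<alpha | v> for a functional given by its coefficient row."""
    return sum(a * x for a, x in zip(alpha, v))


def dual(A):
    """A* via the turnover rule <A* alpha | v> = <alpha | A v>, i.e. alpha -> alpha A."""
    return lambda alpha: tuple(sum(alpha[i] * A[i][j] for i in range(len(A)))
                               for j in range(len(A[0])))


A = [[1, 2], [0, 1]]
A_inv = [[1, -2], [0, 1]]
B = [[3, 0], [1, 1]]
alpha, v = (2, -1), (1, 4)

print(pair(dual(A)(alpha), v) == pair(alpha, apply(A, v)))   # turnover rule: True
print(dual(matmul(A, B))(alpha) == dual(B)(dual(A)(alpha)))  # (ii) (AB)* = B*A*: True
print(dual(A_inv)(dual(A)(alpha)) == alpha)                  # (iii) (A^-1)* undoes A*: True
```

The order reversal in (ii) shows up in the code as `dual(B)` being applied after `dual(A)`.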

Matrices

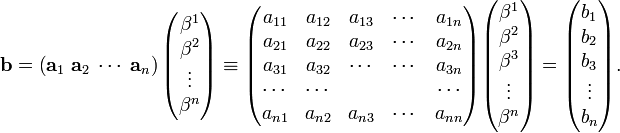

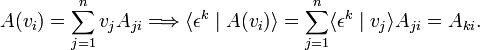

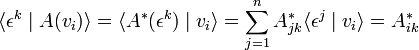

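Since A and A∗ are linear, each is in one-to-one correspondence with a matrix once a basis of the respective space has been chosen. With biorthogonal bases {vi} of V and {εi} of V∗, write:

$$A\,v_i = \sum_{j=1}^{n} v_j\, A_{ji}, \qquad A^{*}\varepsilon_i = \sum_{j=1}^{n} \varepsilon_j\, A^{*}_{ji}, \qquad i = 1, \ldots, n.$$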

On the other hand,

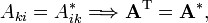

so that

$$A^{*}_{ki} = A_{ik}, \qquad\text{i.e.,}\qquad \mathbf{A}^{*} = \mathbf{A}^{\mathrm{T}},$$

where the superscript T stands for matrix transposition. The matrix of A∗ is equal to the transpose of the matrix of A.
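The transposition rule is most instructive in a non-standard basis. The sketch below assumes V = ℝ2, a hypothetical basis v1 = (1, 0), v2 = (1, 1) (the columns of P), and a hypothetical map A given in standard coordinates; the rows of P−1 serve as the coefficient rows of the biorthogonal εi. The two matrices are built independently from their defining expansions and then compared.

```python
from fractions import Fraction


def inverse2(m):
    """Exact inverse of a 2x2 matrix."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[Fraction(d, det), Fraction(-b, det)], [Fraction(-c, det), Fraction(a, det)]]


def dot(x, y):
    return sum(p * q for p, q in zip(x, y))


def apply(M, x):
    """Matrix M acting on the column vector x."""
    return tuple(dot(row, x) for row in M)


def row_times(row, M):
    """Row vector times matrix: the coefficient row of the functional alpha o M."""
    return tuple(sum(row[k] * M[k][j] for k in range(len(M))) for j in range(len(M[0])))


A_std = [[1, 2], [3, 4]]                          # hypothetical A in standard coordinates
P = [[1, 1], [0, 1]]                              # columns: basis v1 = (1, 0), v2 = (1, 1)
v = [tuple(P[r][i] for r in range(2)) for i in range(2)]
eps = inverse2(P)                                 # rows: biorthogonal eps1, eps2

# Matrix of A in {v_i}: A v_i = sum_j v_j A_ji, hence A_ji = <eps_j | A v_i>
A_mat = [[dot(eps[j], apply(A_std, v[i])) for i in range(2)] for j in range(2)]

# Matrix of A* in {eps_i}: A* eps_i = sum_j eps_j A*_ji with A* eps_i = eps_i A_std;
# the j-th coefficient of a functional in the dual basis is its value on v_j.
A_star = [[dot(row_times(eps[i], A_std), v[j]) for i in range(2)] for j in range(2)]

A_mat_T = [list(col) for col in zip(*A_mat)]
print(A_star == A_mat_T)                          # True
```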

Basis transformation

If biorthogonal bases of V and V∗ are transformed simultaneously into new bases that are again biorthogonal, the matrices effecting the transformations are contragredient to each other (each is the transpose of the inverse of the other).

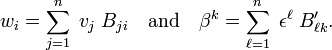

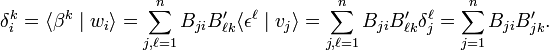

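To show this, write the new bases of V and V∗, respectively, in terms of the old ones:

$$v'_i = \sum_{j=1}^{n} v_j\, B_{ji}, \qquad \varepsilon'_i = \sum_{j=1}^{n} \varepsilon_j\, B'_{ji}, \qquad i = 1, \ldots, n.$$

The matrices B and B′ are invertible (they map bases onto bases). Since both the old and the new pairs of bases are biorthogonal,

$$\delta_{ik} = \langle \varepsilon'_i \mid v'_k \rangle = \sum_{j,l=1}^{n} B'_{ji}\, B_{lk}\, \langle \varepsilon_j \mid v_l \rangle = \sum_{j=1}^{n} B'_{ji}\, B_{jk}.$$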

As matrices:

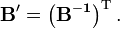

where I is the n×n identity matrix with general element δki. In conclusion,

$$\mathbf{B}' = \left(\mathbf{B}^{-1}\right)^{\mathrm{T}} .$$
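A quick numerical check of the contragredience, assuming the old bases are the standard basis of ℝ3 and its dual, and a hypothetical invertible matrix B. The sketch computes B′ = (B−1)T and verifies that the new pair of bases is again biorthogonal.

```python
from fractions import Fraction


def inverse(m):
    """Exact Gauss-Jordan inverse of a square matrix given as a list of rows."""
    n = len(m)
    a = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(m)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [x / p for x in a[col]]
        for r in range(n):
            if r != col and a[r][col] != 0:
                f = a[r][col]
                a[r] = [x - f * y for x, y in zip(a[r], a[col])]
    return [row[n:] for row in a]


B = [[2, 1, 0], [0, 1, 1], [1, 0, 1]]          # hypothetical invertible matrix (det = 3)
n = len(B)
B_inv = inverse(B)
B_prime = [[B_inv[j][i] for j in range(n)] for i in range(n)]   # (B^-1)^T

# Old bases: standard e_j and its dual. Then v'_i = sum_j e_j B_ji is column i of B,
# and eps'_i = sum_j e^j B'_ji has column i of B' as its coefficient row.
v_new = [[B[j][i] for j in range(n)] for i in range(n)]
eps_new = [[B_prime[j][i] for j in range(n)] for i in range(n)]

check = [[sum(p * q for p, q in zip(eps_new[i], v_new[k])) for k in range(n)]
         for i in range(n)]
print(check == [[1, 0, 0], [0, 1, 0], [0, 0, 1]])   # True: new bases are biorthogonal
```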

Inner product spaces

Let an inner product (v, w) on V be given. The inner product is a bilinear function on the Cartesian product V × V:

$$V \times V \ni (v, w) \;\mapsto\; (v, w) \in \mathbb{R}.$$

It is assumed that the inner product is symmetric: (v, w) = (w, v) and non-degenerate: (w, v) = 0 for all w ∈ V if and only if v = 0. It is not assumed that the inner product is definite, i.e., it is possible that (v, v) = 0 while v ≠ 0.

The natural (basis-independent) linear map χ: V → V∗, defined for a given v ∈ V by

$$\langle \chi(v) \mid w \rangle := (v, w) \qquad \text{for all } w \in V,$$

is a vector space isomorphism between V and V∗. In the first place, as was shown earlier, dim V = dim V∗. Secondly, χ is injective. Indeed, suppose that v belongs to the kernel of the linear map χ, that is, χ(v) = ω, the zero functional. Then

$$(w, v) = (v, w) = \langle \chi(v) \mid w \rangle = \langle \omega \mid w \rangle = 0 \qquad \text{for all } w \in V.$$

From the non-degeneracy of the inner product it follows that v = 0: the kernel of χ contains only the zero vector. So χ is one-to-one, and because dim V = dim V∗ it is also onto (by the rank–nullity theorem); thus χ is a vector space isomorphism. Since χ is defined without bases, the two spaces are naturally isomorphic. In conclusion: it is possible to identify V∗ with V when V has a non-degenerate inner product.
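The argument uses only non-degeneracy, not definiteness. A sketch assuming a hypothetical indefinite inner product on ℝ2 with Gram matrix G = diag(1, −1) (a Minkowski-type form): a non-zero vector can have (v, v) = 0, yet χ(v) is not the zero functional, because G is invertible. Here χ(v) is encoded as the coefficient row vTG of the functional w ↦ (v, w).

```python
# Hypothetical indefinite but non-degenerate form on R^2: (v, w) = v1*w1 - v2*w2
G = [[1, 0], [0, -1]]


def inner(v, w):
    """(v, w) = v^T G w."""
    return sum(v[i] * G[i][j] * w[j] for i in range(2) for j in range(2))


def chi(v):
    """chi(v): coefficient row of the functional w -> (v, w), i.e. v^T G."""
    return tuple(sum(v[i] * G[i][j] for i in range(2)) for j in range(2))


null = (1, 1)
print(inner(null, null))   # 0: a non-zero vector with (v, v) = 0
print(chi(null))           # (1, -1): chi(null) is nevertheless not the zero functional

# Non-degeneracy <=> G invertible <=> the kernel of chi is {0}
det_G = G[0][0] * G[1][1] - G[0][1] * G[1][0]
print(det_G)               # -1
```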

An example of such an identification is the vector product a×b of two vectors in ℝ3. One can define this product as proportional to the wedge product (antisymmetric tensor) a ∧ b, and the space of wedge products as a dual space of ℝ3 (see the example below). More commonly one considers the vector product a×b as an element of ℝ3; one thus identifies the space of wedge products with ℝ3.
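The identification of a ∧ b with a×b can be made concrete in components. A sketch assuming the standard orientation of ℝ3 and an illustrative encoding of a ∧ b by its antisymmetric component matrix wij = aibj − ajbi; the three independent entries (w23, w31, w12) coincide with the Cartesian components of a×b.

```python
def wedge(a, b):
    """Components w_ij = a_i b_j - a_j b_i of the antisymmetric tensor a ^ b."""
    return [[a[i] * b[j] - a[j] * b[i] for j in range(3)] for i in range(3)]


def cross(a, b):
    """Vector product a x b in R^3."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


a, b = (1, 2, 3), (4, 5, 6)
w = wedge(a, b)

# Only three entries of w are independent: (w_23, w_31, w_12) in 1-based indices
from_wedge = (w[1][2], w[2][0], w[0][1])
print(from_wedge, cross(a, b))   # (-3, 6, -3) (-3, 6, -3)
```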

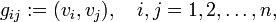

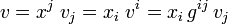

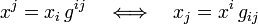

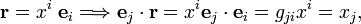

A biorthogonal (dual) basis may also be defined within the space V itself. An upper/lower (also known as contravariant/covariant) index notation is convenient, together with the Einstein summation convention,[1] which states that summation is implied when the same index appears twice in a product. The metric (or fundamental) tensor g is needed,

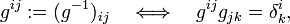

where {vi} is a basis of V. Because the inner product is symmetric and non-degenerate, the tensor g is symmetric and invertible.[2] Introduce a notation for the inverse of g,

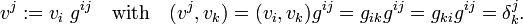

where $\delta^{i}_{k}$ is the Kronecker delta. (The upper indices on g−1 and on vectors indicate that these indices are contravariant; this is indeed the case, see tensor.) Now define a biorthogonal basis of V by raising the indices of the basis elements $v_i$. Because g is non-singular, the n vectors $v^j$ are linearly independent and accordingly form a basis of V:

$$v^{j} := g^{jk}\, v_k, \qquad j = 1, \ldots, n.$$
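Raising the index of the basis vectors can be sketched numerically. The example assumes V = ℝ2 with the ordinary dot product as inner product and a hypothetical non-orthogonal basis v1 = (2, 0), v2 = (1, 1); it computes $g_{ij} = (v_i, v_j)$, its inverse $g^{jk}$, the vectors $v^j = g^{jk} v_k$, and checks $(v^j, v_i) = \delta^j_i$.

```python
from fractions import Fraction


def inverse2(m):
    """Exact inverse of a 2x2 matrix."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[Fraction(d, det), Fraction(-b, det)], [Fraction(-c, det), Fraction(a, det)]]


def dot(x, y):
    """The inner product (x, y); here the ordinary dot product on R^2."""
    return sum(p * q for p, q in zip(x, y))


basis = [(2, 0), (1, 1)]                                              # hypothetical v_1, v_2
n = len(basis)
g = [[dot(basis[i], basis[j]) for j in range(n)] for i in range(n)]   # g_ij
g_inv = inverse2(g)                                                   # g^{jk}

# v^j = g^{jk} v_k (summation over k)
raised = [tuple(sum(g_inv[j][k] * basis[k][c] for k in range(n)) for c in range(n))
          for j in range(n)]

# (v^j, v_i) = delta^j_i
print([[dot(raised[j], basis[i]) for i in range(n)] for j in range(n)] == [[1, 0], [0, 1]])
```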

Writing the basis of V∗ biorthogonal to $\{v_i\}$ as $\{\varepsilon^j\}$ and comparing the two expressions

$$\langle \varepsilon^{j} \mid v_i \rangle = \delta^{j}_{i} \qquad\text{and}\qquad (v^{j}, v_i) = g^{jk}\,(v_k, v_i) = g^{jk}\,g_{ki} = \delta^{j}_{i},$$

one sees a close correspondence between the two bases biorthogonal to $\{v_k\}$. The bases belong to different spaces, but because they are so closely connected, it is often stated that "linear combinations of the basis $\{v^i\}$ belong to the dual of V." Strictly speaking, one should include the isomorphism $\chi: v^i \mapsto \varepsilon^i$, but χ clutters the notation and is therefore omitted. Conversely, when it is stated that $x_i\,\varepsilon^i$ belongs to V, one should make the (mental) substitution $\varepsilon^i \to v^i$ in this expression.

If one writes

$$v = x^{j}\, v_j = x_i\, v^{i}, \qquad v \in V,$$

then it follows that

$$x_i = (v_i, v) = g_{ij}\, x^{j}, \qquad x^{j} = (v^{j}, v) = g^{ji}\, x_i,$$

which shows that the inverse g−1 of the fundamental (metric) tensor transforms the covariant components $x_i$ into the contravariant components $x^j$ (raises the index) of the same vector v ∈ V, and vice versa: g lowers the index of a vector component.

Example

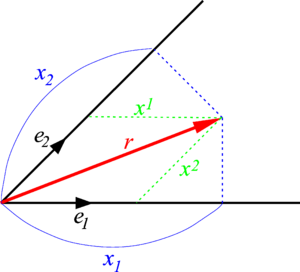

In a slight change of notation, one may write

$$\mathbf{r} = x^{k}\,\mathbf{e}_k, \qquad x_k = \mathbf{e}_k \cdot \mathbf{r},$$

where $\{\mathbf{e}_k\}$ is a non-orthogonal basis of V, the centered dot stands for an inner product, and r ∈ V. The relation between the covariant components $x_k$ and the contravariant components $x^k$ is shown in the figure for V = ℝ2.
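The relation in the figure can be reproduced numerically. A sketch assuming the ordinary dot product on ℝ2, a hypothetical non-orthogonal basis e1 = (1, 0), e2 = (1, 2) and a hypothetical vector r = (3, 2): the covariant components are $x_k = \mathbf{e}_k\cdot\mathbf{r}$, the contravariant ones follow by raising the index with g−1, and lowering again with g recovers the $x_k$.

```python
from fractions import Fraction


def dot(x, y):
    return sum(p * q for p, q in zip(x, y))


e = [(1, 0), (1, 2)]        # hypothetical non-orthogonal basis e_1, e_2
r = (3, 2)                  # hypothetical vector r

g = [[dot(a, b) for b in e] for a in e]          # g_kl = e_k . e_l
det = g[0][0] * g[1][1] - g[0][1] * g[1][0]
g_inv = [[Fraction(g[1][1], det), Fraction(-g[0][1], det)],
         [Fraction(-g[1][0], det), Fraction(g[0][0], det)]]

cov = [dot(ek, r) for ek in e]                                             # x_k = e_k . r
contra = [sum(g_inv[k][l] * cov[l] for l in range(2)) for k in range(2)]   # x^k = g^{kl} x_l

# Consistency checks: r = x^k e_k, and g lowers the index back: x_k = g_kl x^l
rebuilt = tuple(sum(contra[k] * e[k][c] for k in range(2)) for c in range(2))
lowered = [sum(g[k][l] * contra[l] for l in range(2)) for k in range(2)]
print(cov, contra, rebuilt == r, lowered == cov)
```

For this basis the covariant components (3, 7) and the contravariant components (2, 1) differ visibly, as in the figure.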

Application: reciprocal space

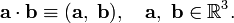

Let us consider V = ℝ3 with the usual positive definite inner product, written as a centered dot, a · b.
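The reciprocal basis constructed in this section can be computed directly. A sketch assuming a hypothetical non-orthogonal, non-normalized basis a1 = (1, 0, 0), a2 = (1, 2, 0), a3 = (0, 1, 3) of ℝ3, with the triple product $[\mathbf{a}\;\mathbf{b}\;\mathbf{c}] := (\mathbf{a}\times\mathbf{b})\cdot\mathbf{c}$ and $\mathbf{a}^1 = (\mathbf{a}_2\times\mathbf{a}_3)/[\mathbf{a}_1\;\mathbf{a}_2\;\mathbf{a}_3]$ plus cyclic permutations. It checks biorthogonality and that the contravariant components of a vector b equal the Cramer ratios.

```python
from fractions import Fraction


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triple(a, b, c):
    """Triple product [a b c] := (a x b) . c, i.e. the 3x3 determinant."""
    return dot(cross(a, b), c)


a = [(1, 0, 0), (1, 2, 0), (0, 1, 3)]   # hypothetical basis a_1, a_2, a_3
vol = triple(*a)                        # [a_1 a_2 a_3], non-zero for a basis

# a^k = (a_{k+1} x a_{k+2}) / [a_1 a_2 a_3], indices taken cyclically
recip = [tuple(Fraction(x, vol) for x in cross(a[(k + 1) % 3], a[(k + 2) % 3]))
         for k in range(3)]

# Biorthogonality: a^j . a_k = delta^j_k
delta = [[dot(recip[j], a[k]) for k in range(3)] for j in range(3)]

# Contravariant components of b: beta^k = a^k . b, compared with Cramer's rule
b = (2, 5, 6)
beta = [dot(recip[k], b) for k in range(3)]
cramer = [Fraction(triple(*[b if i == k else a[i] for i in range(3)]), vol)
          for k in range(3)]
rebuilt = tuple(sum(beta[k] * a[k][c] for k in range(3)) for c in range(3))
print(delta == [[1, 0, 0], [0, 1, 0], [0, 0, 1]], beta == cramer, rebuilt == b)  # True True True
```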

The triple product plays an important role

where the × stand for a vector product. The quantity between square brackets is a determinant (a three-fold wedge product). The determinant vanishes in case of linear dependence

In particular, the determinant vanishes if a vector appears at least twice in the determinant.

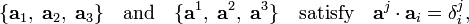

Consider a non-orthogonal, non-normalized basis a1, a2, a3 of ℝ3. Define vectors

then, for instance,

The bases

and hence are biorthogonal (dual, reciprocal). Strictly speaking, the dual space ℝ3∗ is spanned by the normalized vector products a j and the original space ℝ3 by the non-orthononormal a j. But, as is usual for vector products, the biorthogonal basis is assumed to belong to ℝ3.

This example can be generalized to an n-dimensional space V by letting the dual space be spanned by "one-hole vectors". The triple wedge product becomes the n-fold wedge product (determinant):

(In physics this is known as a "Fermi vacuum", the wedge product contains as many vectors as the dimension of the space. It is also known as Slater determinant.) By removing one vector one defines a "one-hole vector", for instance the nth one,

The "one-hole vectors" are linear functionals acting on the "one-particle vectors". Take the "one-particle vector" b,

Clearly, the dual space V∗ spanned by the "one-hole vectors" is distinct from V. Yet, as the case for n = 3 shows, the two spaces can be identified in a natural manner.

Finally one gets the following expression for the contravariant components of vectors (summation convention!),

One recognizes this as Cramer's rule for the solution of {βk, k=1,2,...,n} from the simultaneous linear equations

[edit] Notes

- ↑ A. Einstein, Die Grundlage der allgemeinen Relativitätstheorie [The foundation of the general theory of relativity], Annalen der Physik, Vierte Folge, vol. 49, pp. 769–822 (1916). Downloadable pdf

- ↑ If the tensor g were singular, its kernel would be non-zero, i.e., there would be a non-zero n-tuple k = (k1, k2, ..., kn) such that

![[ \mathbf{a}\;\mathbf{b}\;\mathbf{c}] := (\mathbf{a}\times\mathbf{b})\cdot\mathbf{c}](../w/images/math/7/8/1/781323b5f9257ae4d3a9e15fc0c4e543.png)

![[ \mathbf{a}\;\mathbf{b}\;\mathbf{c}] = 0

\quad\Longleftrightarrow\quad\hbox{if}\; \{\mathbf{a},\;\mathbf{b},\;\mathbf{c} \}

\;\;\hbox{linearly dependent}.](../w/images/math/0/5/9/0599edc49709e7f40a2f555d3f86c9c5.png)

![\begin{align}

\mathbf{a}^1 &:= \frac{\mathbf{a}_2\times\mathbf{a}_3}{[ \mathbf{a}_1\;\mathbf{a}_2\;\mathbf{a}_3]} \\

\mathbf{a}^2 &:= \frac{\mathbf{a}_3\times\mathbf{a}_1}{[ \mathbf{a}_1\;\mathbf{a}_2\;\mathbf{a}_3]} \\

\mathbf{a}^3 &:= \frac{\mathbf{a}_1\times\mathbf{a}_2}{[ \mathbf{a}_1\;\mathbf{a}_2\;\mathbf{a}_3]} \\

\end{align}](../w/images/math/4/b/3/4b37eb77eac312e558a3cc609c404a7a.png)

![\mathbf{a}^3 \cdot \mathbf{a}_k

= \left(\frac{ \mathbf{a}_1\times\mathbf{a}_2 }

{[ \mathbf{a}_1\;\mathbf{a}_2\;\mathbf{a}_3] } \right) \cdot\mathbf{a}_k

=\frac{[\mathbf{a}_1\;\mathbf{a}_2\;\mathbf{a}_k]}

{[\mathbf{a}_1\;\mathbf{a}_2\;\mathbf{a}_3]} = \delta^3_k, \quad k=1,2,3.](../w/images/math/9/f/0/9f011aa2b90c862292873bc37d8ad81e.png)

![[\mathbf{a}_1\; \mathbf{a}_2\;\cdots\; \mathbf{a}_n ]](../w/images/math/8/4/c/84cfac621c3bdaaa47de28a0605ad671.png)

![\mathbf{a}^n := \frac{[\mathbf{a}_1\; \mathbf{a}_2\;\cdots\; \mathbf{a}_{n-1} ]} {[\mathbf{a}_1\; \mathbf{a}_2\;\cdots\; \mathbf{a}_n ]}.](../w/images/math/6/b/9/6b9c4a69870c9067506293cecef42bfa.png)

![\langle \mathbf{a}^k \mid \mathbf{b} \rangle

:= \frac{[\mathbf{a}_1\; \mathbf{a}_2\;\cdots\;\mathbf{a}_{k-1}\;\mathbf{b}\;\mathbf{a}_{k+1}\;\cdots \mathbf{a}_{n} ]} {[\mathbf{a}_1\; \mathbf{a}_2\;\cdots\; \mathbf{a}_n ]},\quad \mathbf{b} \in V.](../w/images/math/1/a/4/1a46dcbf1df92a85d48571b3c9f0ca0c.png)