Proof that π is irrational

Although the mathematical constant known as π (pi) has been studied since ancient times, it was not until the 18th century that it was proved to be an irrational number.

In the 20th century, proofs were found that require no prerequisite knowledge beyond integral calculus. One of these, due to Ivan Niven (1947), is given below; another is due to Mary Cartwright (reproduced in Jeffreys).

Niven's proof

Like all proofs of irrationality, the argument proceeds by reductio ad absurdum. Suppose π is rational, i.e. π = a / b for some integers a and b, which may be taken without loss of generality to be positive.

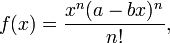

Given any positive integer n we can define functions f and F as follows:

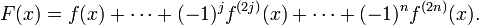

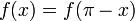

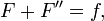
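As a concrete sketch, f and F can be built with exact rational arithmetic, taking $f(x) = x^n(a - bx)^n/n!$ and $F = f - f'' + f'''' - \cdots + (-1)^n f^{(2n)}$ as above. The argument only ever handles π through the assumed fraction a/b, so any positive integers can stand in for a and b; the values 22 and 7 below are purely illustrative (π is not 22/7), and the helper names `niven_f`, `derivative` and `niven_F` are hypothetical. A polynomial is stored as a list of coefficients, lowest degree first.

```python
from fractions import Fraction
from math import comb, factorial


def niven_f(a: int, b: int, n: int) -> list[Fraction]:
    """Coefficients of f(x) = x^n (a - b x)^n / n!, lowest degree first."""
    coeffs = [Fraction(0)] * (2 * n + 1)
    for i in range(n + 1):
        # Binomial term C(n, i) a^(n-i) (-b)^i x^i, shifted up by the factor x^n.
        coeffs[n + i] = Fraction(comb(n, i) * a ** (n - i) * (-b) ** i, factorial(n))
    return coeffs


def derivative(p: list[Fraction]) -> list[Fraction]:
    """Coefficients of p'(x); a constant differentiates to the empty list (zero)."""
    return [k * p[k] for k in range(1, len(p))]


def niven_F(f: list[Fraction]) -> list[Fraction]:
    """Coefficients of F = f - f'' + f'''' - ..., stopping once the derivative vanishes."""
    total = [Fraction(0)] * len(f)
    term, sign = f, 1
    while term:  # f has degree 2n, so this adds the terms j = 0, 1, ..., n
        for k, c in enumerate(term):
            total[k] += sign * c
        term, sign = derivative(derivative(term)), -sign
    return total


a, b, n = 22, 7, 2  # illustrative stand-ins for the assumed fraction a/b
f = niven_f(a, b, n)
print([str(c) for c in f])           # ['0', '0', '242', '-154', '49/2']
print([str(c) for c in niven_F(f)])  # ['104', '924', '-52', '-154', '49/2']
# Every coefficient of f is an integer divided by n!:
print(all((c * factorial(n)).denominator == 1 for c in f))  # True
```

Keeping the coefficients as `Fraction` values means the claim about 1/n! can be checked exactly rather than up to rounding.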

Then f is a polynomial of degree 2n whose nonzero terms all have degree at least n, and each of its coefficients is 1/n! times an integer. It satisfies the identity

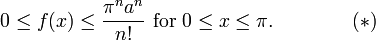

and the inequality

Observe that for 0 ≤ j < n, we have

- f(j)(0) = f(j)(π) = 0.

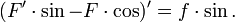
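The symmetry $f(\pi - x) = f(x)$ and the vanishing of $f^{(j)}$ at both endpoints for j < n are exact algebraic facts once π is replaced by the assumed fraction r = a/b, so they can be spot-checked with rational arithmetic. This is a sanity check under stated assumptions, not a proof: the symmetry is tested only at sample points, a = 22, b = 7, n = 5 are arbitrary illustrative values, and the helpers `niven_f`, `nth_derivative` and `evaluate` are hypothetical.

```python
from fractions import Fraction
from math import comb, factorial


def niven_f(a: int, b: int, n: int) -> list[Fraction]:
    """Coefficients of f(x) = x^n (a - b x)^n / n!, lowest degree first."""
    return [Fraction(0)] * n + [
        Fraction(comb(n, i) * a ** (n - i) * (-b) ** i, factorial(n)) for i in range(n + 1)
    ]


def nth_derivative(p: list[Fraction], j: int) -> list[Fraction]:
    """Coefficients of the j-th derivative of p."""
    for _ in range(j):
        p = [k * p[k] for k in range(1, len(p))]
    return p


def evaluate(p: list[Fraction], x: Fraction) -> Fraction:
    """Horner evaluation of p at x, exact over the rationals."""
    result = Fraction(0)
    for c in reversed(p):
        result = result * x + c
    return result


a, b, n = 22, 7, 5   # illustrative values only
r = Fraction(a, b)   # plays the role of π in the argument
f = niven_f(a, b, n)

# Symmetry f(r - x) = f(x), checked at the sample points 0, 1/10, ..., 31/10.
samples = [Fraction(k, 10) for k in range(32)]
print(all(evaluate(f, r - x) == evaluate(f, x) for x in samples))  # True

# Derivatives of order j < n vanish at both endpoints 0 and r.
print(all(evaluate(nth_derivative(f, j), Fraction(0)) == 0
          and evaluate(nth_derivative(f, j), r) == 0 for j in range(n)))  # True
```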

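For j ≥ n, $f^{(j)}(0) = j!\,c_j/n!$, where $c_j/n!$ is the coefficient of $x^j$ in f and $c_j$ is an integer; since n! divides j!, this is an integer. Differentiating the identity $f(\pi - x) = f(x)$ j times and setting x = 0 gives $f^{(j)}(\pi) = (-1)^j f^{(j)}(0)$, so $f^{(j)}(\pi)$ is an integer as well. Consequently F(0) and F(π) are integers. Next, observe that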

and hence

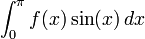
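The two facts used in this step, that every $f^{(j)}$ takes integer values at 0 and π (so F(0) and F(π) are integers) and that F + F'' = f, can likewise be checked exactly with π replaced by the assumed fraction r = a/b. As before, a, b and n are arbitrary illustrative values and all helper names are hypothetical; this confirms the algebra for one case and proves nothing in general.

```python
from fractions import Fraction
from math import comb, factorial


def niven_f(a: int, b: int, n: int) -> list[Fraction]:
    """Coefficients of f(x) = x^n (a - b x)^n / n!, lowest degree first."""
    return [Fraction(0)] * n + [
        Fraction(comb(n, i) * a ** (n - i) * (-b) ** i, factorial(n)) for i in range(n + 1)
    ]


def derivative(p: list[Fraction]) -> list[Fraction]:
    """Coefficients of p'(x); a constant differentiates to the empty list (zero)."""
    return [k * p[k] for k in range(1, len(p))]


def derivatives(p: list[Fraction]):
    """Yield p, p', p'', ... until the coefficient list becomes empty."""
    while p:
        yield p
        p = derivative(p)


def evaluate(p: list[Fraction], x: Fraction) -> Fraction:
    """Horner evaluation of p at x, exact over the rationals."""
    result = Fraction(0)
    for c in reversed(p):
        result = result * x + c
    return result


def add(p: list[Fraction], q: list[Fraction]) -> list[Fraction]:
    """Coefficient-wise sum, padding the shorter list with zeros."""
    m = max(len(p), len(q))
    return [(p[k] if k < len(p) else 0) + (q[k] if k < len(q) else 0) for k in range(m)]


def niven_F(f: list[Fraction]) -> list[Fraction]:
    """F = f - f'' + f'''' - ..., the alternating sum of the even derivatives of f."""
    total, term, sign = [], f, 1
    while term:
        total = add(total, [sign * c for c in term])
        term, sign = derivative(derivative(term)), -sign
    return total


a, b, n = 22, 7, 2   # illustrative values only
r = Fraction(a, b)   # stands in for π
f = niven_f(a, b, n)
F = niven_F(f)

# Every derivative of f takes integer values at 0 and at r.
print(all(evaluate(d, x).denominator == 1 for d in derivatives(f) for x in (Fraction(0), r)))  # True
print(evaluate(F, Fraction(0)), evaluate(F, r))  # 104 104
print(add(F, derivative(derivative(F))) == f)    # True, i.e. F + F'' = f
```

The two printed values of F coincide because the symmetry $f(r - x) = f(x)$ makes each even derivative take the same value at 0 and at r.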

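It follows that

$$\int_0^\pi f(x)\sin x\,dx = \Bigl[F'(x)\sin x - F(x)\cos x\Bigr]_0^\pi = F(\pi) + F(0)$$

is an integer, and it is positive because the integrand is positive on (0, π). But integrating the inequality (*) over an interval of length π gives

$$0 < \int_0^\pi f(x)\sin x\,dx < \frac{\pi^{n+1} a^n}{n!},$$

and the right-hand side tends to 0 as n → ∞ (a is fixed, and $c^n/n! \to 0$ for every constant c). So for large n the integral would be a positive integer less than 1, which is impossible. Hence π is irrational.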
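The last step only needs the bound $\pi^{n+1}a^n/n!$ to tend to 0 for fixed a. The sketch below (the function name `first_n_below_one` is hypothetical) finds the first n at which that bound falls below 1, beyond which the positive integer F(π) + F(0) would have to lie strictly between 0 and 1. It updates the bound multiplicatively to avoid overflowing intermediate powers and factorials, and it uses floating-point π, so it illustrates the limit rather than forming part of the proof.

```python
import math


def first_n_below_one(a: int) -> int:
    """Smallest n >= 1 for which the bound pi^(n+1) * a^n / n! drops below 1."""
    n, bound = 0, math.pi  # n = 0: pi^1 * a^0 / 0! = pi
    while True:
        n += 1
        # Going from n - 1 to n multiplies the bound by pi * a / n.
        bound *= math.pi * a / n
        if bound < 1:
            return n


print(first_n_below_one(1))  # 8
print(first_n_below_one(22))
```

Here a = 1 is just a convenient input. Larger values of a only delay the crossing, since the ratio π a / n eventually falls below 1 and the bound then shrinks faster than geometrically.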

References

- Ivan Niven, "A Simple Proof that π is Irrational", Bull. Amer. Math. Soc., vol. 53, p. 509, 1947.

- Harold Jeffreys, Scientific Inference, 3rd edition, Cambridge University Press, 1973.