Covariance

The covariance, usually denoted as Cov, is a statistical parameter used to compare two real random variables on the same sample space (more precisely, the same probability space). It is defined as the expectation (or mean value) of the product of the deviations (from their respective mean values) of the two variables.

The sign of the covariance indicates a linear trend between the two variables.

- If one variable increases (in the mean) with the other, then the covariance is positive.

- It is negative if one variable tends to decrease when the other increases.

- If it is 0 then there is no linear correlation between the two variables.

In particular, the covariance is 0 for stochastically independent variables. The converse is not true, however, because other (nonlinear) dependencies may still exist.
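A small exact computation may help to illustrate that a covariance of 0 does not imply independence. The sketch below assumes a hypothetical variable X that is uniform on {-1, 0, 1} and sets Y = X²; both the distribution and the variable names are chosen only for illustration.

```python
from fractions import Fraction

# Assumption for illustration: X is uniform on {-1, 0, 1} and Y = X**2,
# so Y is completely determined by X (a nonlinear dependency).
xs = [-1, 0, 1]
p = Fraction(1, 3)  # probability of each value of X

mean_x = sum(p * x for x in xs)       # E(X)
mean_y = sum(p * x**2 for x in xs)    # E(Y)

# Expectation of the product of the deviations.
cov_xy = sum(p * (x - mean_x) * (x**2 - mean_y) for x in xs)

# Independence would require P(X=0, Y=0) == P(X=0) * P(Y=0).
p_joint = p        # Y = 0 exactly when X = 0
p_product = p * p  # P(X=0) * P(Y=0)

print(cov_xy)                # 0
print(p_joint == p_product)  # False
```

The covariance vanishes because the positive and negative deviations of X cancel, although Y depends on X as strongly as possible.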

The value of the covariance is scale-dependent and therefore does not show how strong the correlation is. For this purpose a normed version of the covariance is used: the correlation coefficient, which is independent of scale.
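The scale dependence can be made concrete with a short sketch. The data values and the helper names `cov` and `corr` are assumptions made for this example; `corr` uses the usual normalization, the covariance divided by the product of the two standard deviations.

```python
import math

def cov(xs, ys):
    """Covariance of paired data, taking the mean with 1/n."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / n

def corr(xs, ys):
    """Correlation coefficient: covariance normed by both standard deviations."""
    return cov(xs, ys) / math.sqrt(cov(xs, xs) * cov(ys, ys))

# Hypothetical measurements, invented for illustration only.
heights_m = [1.5, 1.6, 1.7, 1.8]
weights_kg = [50.0, 58.0, 69.0, 80.0]
heights_cm = [100 * h for h in heights_m]  # same data on a different scale

# The covariance changes with the unit, the correlation coefficient does not.
print(cov(heights_m, weights_kg), cov(heights_cm, weights_kg))
print(corr(heights_m, weights_kg), corr(heights_cm, weights_kg))
```

Changing the unit from metres to centimetres multiplies the covariance by 100, while the correlation coefficient stays the same up to rounding.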

Formal definition

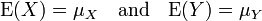

The covariance of two real random variables X and Y with expectation (mean value)

is defined by

Remark:

If the two random variables are the same then

their covariance is equal to the variance of the single variable: Cov(X,X) = Var(X).

In a more general context of probability theory the covariance is a second-order central moment of the two-dimensional random variable (X,Y), often denoted as μ11.

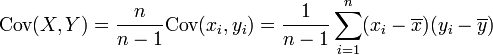

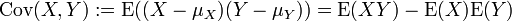
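As an illustration of the definition, the following sketch computes the covariance of a small discrete joint distribution as the expectation of the product of the deviations. The distribution `joint` and the function names are hypothetical and serve only as an example.

```python
from fractions import Fraction

# Hypothetical joint distribution of (X, Y): value pair -> probability.
joint = {
    (0, 0): Fraction(3, 8),
    (0, 1): Fraction(1, 8),
    (1, 0): Fraction(1, 8),
    (1, 1): Fraction(3, 8),
}

def expectation(dist, f):
    """Expectation of f(X, Y) under the joint distribution dist."""
    return sum(p * f(x, y) for (x, y), p in dist.items())

def covariance(dist):
    """Expectation of the product of the deviations from the means."""
    mu_x = expectation(dist, lambda x, y: x)
    mu_y = expectation(dist, lambda x, y: y)
    return expectation(dist, lambda x, y: (x - mu_x) * (y - mu_y))

# Joint distribution of the pair (X, X), built from the marginal of X.
marginal_x = {}
for (x, _), p in joint.items():
    marginal_x[x] = marginal_x.get(x, 0) + p
pair_xx = {(x, x): p for x, p in marginal_x.items()}

# Variance of X computed separately as E(X^2) - E(X)^2.
var_x = (expectation(joint, lambda x, y: x * x)
         - expectation(joint, lambda x, y: x) ** 2)

print(covariance(joint))    # 1/8
print(covariance(pair_xx))  # 1/4
print(var_x)                # 1/4
```

The last two values agree, in line with the remark that Cov(X,X) = Var(X).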

Finite data

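For a finite set of data

$$ (x_i, y_i) \in \mathbb{R}^2, \qquad i = 1, \dots, n, $$

with mean values $\bar{x} = \frac{1}{n} \sum_{i=1}^n x_i$ and $\bar{y} = \frac{1}{n} \sum_{i=1}^n y_i$, the covariance is given by

$$ \frac{1}{n} \sum_{i=1}^n (x_i - \bar{x})(y_i - \bar{y}) $$

or, using a convenient notation

$$ [a_i] := \sum_{i=1}^n a_i $$

introduced by Gauss, by

$$ \frac{1}{n} \left( [x_i y_i] - \frac{1}{n} [x_i][y_i] \right) $$

This is equivalent to taking the uniform distribution where each item (xi,yi) has probability 1/n.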
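The two ways of computing the covariance of finite data can be compared in a short sketch. It assumes integer data and writes the Gauss notation [a_i] as a plain sum; the function names and the sample values are made up for this example.

```python
from fractions import Fraction

def cov_deviations(xs, ys):
    """Mean (with 1/n) of the products of the deviations from the means."""
    n = len(xs)
    mean_x = Fraction(sum(xs), n)
    mean_y = Fraction(sum(ys), n)
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / n

def cov_gauss(xs, ys):
    """Same value via sums only: (1/n) * ([x y] - (1/n) * [x] * [y])."""
    n = len(xs)
    sum_xy = sum(x * y for x, y in zip(xs, ys))  # [x_i y_i]
    return Fraction(1, n) * (sum_xy - Fraction(sum(xs) * sum(ys), n))

# Hypothetical integer data, chosen only for illustration.
xs = [1, 2, 3, 4]
ys = [2, 4, 5, 9]

print(cov_deviations(xs, ys))  # 11/4
print(cov_gauss(xs, ys))       # 11/4
```

Exact fractions are used so that the agreement of both formulas is not blurred by rounding.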

Unbiased estimate

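The expectation of the covariance of a random sample, taken from a probability distribution, depends on the size n of the sample: it equals (n-1)/n times the covariance of the distribution and is therefore slightly smaller in absolute value.

An unbiased estimate of the covariance is

$$ \frac{1}{n-1} \sum_{i=1}^n (x_i - \bar{x})(y_i - \bar{y}) $$

where $\bar{x}$ and $\bar{y}$ denote the mean values of the sample.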

Remark:

The distinction between the covariance of a sample and the estimated covariance of the distribution is not always clearly made. This explains why one finds both formulae for the covariance: the one taking the mean with "1 / n" and the one with "1 / (n-1)" instead.
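The effect of the two divisors can be checked exactly on a small example. The sketch below assumes a hypothetical four-point distribution and independent samples of size 3, and it enumerates every possible sample to obtain the exact expectation; all names are illustrative.

```python
from fractions import Fraction
from itertools import product

# Hypothetical distribution of (X, Y): value pair -> probability.
joint = {(0, 0): Fraction(3, 8), (0, 1): Fraction(1, 8),
         (1, 0): Fraction(1, 8), (1, 1): Fraction(3, 8)}

# Covariance of the distribution itself.
mu_x = sum(p * x for (x, _), p in joint.items())
mu_y = sum(p * y for (_, y), p in joint.items())
true_cov = sum(p * (x - mu_x) * (y - mu_y) for (x, y), p in joint.items())

def sample_cov(pairs, divisor):
    """Sum of products of deviations from the sample means, over divisor."""
    n = len(pairs)
    mean_x = Fraction(sum(x for x, _ in pairs), n)
    mean_y = Fraction(sum(y for _, y in pairs), n)
    return sum((x - mean_x) * (y - mean_y) for x, y in pairs) / divisor

def expected_sample_cov(dist, n, divisor):
    """Exact expectation over all independent samples of size n."""
    total = Fraction(0)
    for sample in product(dist.items(), repeat=n):
        prob = Fraction(1)
        for _, p in sample:
            prob *= p  # independent draws: probabilities multiply
        total += prob * sample_cov([pair for pair, _ in sample], divisor)
    return total

n = 3
with_n = expected_sample_cov(joint, n, n)            # mean taken with 1/n
with_n_minus_1 = expected_sample_cov(joint, n, n - 1)  # mean taken with 1/(n-1)

print(true_cov, with_n, with_n_minus_1)  # 1/8 1/12 1/8
```

With the divisor n the expectation falls short of the covariance of the distribution by the factor (n-1)/n, whereas the divisor n-1 reproduces it exactly.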

Properties

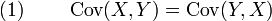

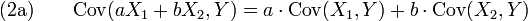

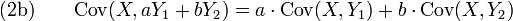

The covariance is

- (1) symmetric

- (2) bilinear

- (3) positive definite

because the following holds:

Since the covariance cannot distinguish between random variables X1 and X2 that have the same deviation (i.e., X1 − E(X1) = X2 − E(X2) holds almost surely), it does not define an inner product for random variables, but only for random variables with mean 0 or, equivalently, for the deviations.
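These properties can be spot-checked on finite data, treated as a uniform distribution over the listed items. The sketch below is only a numerical check on hypothetical integer data, not a proof; the helper `cov` and all values are assumptions made for the example.

```python
from fractions import Fraction

def cov(xs, ys):
    """Covariance of paired integer data, taking the mean with 1/n."""
    n = len(xs)
    mean_x, mean_y = Fraction(sum(xs), n), Fraction(sum(ys), n)
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / n

# Hypothetical data and coefficients, chosen only for illustration.
x1 = [1, 2, 3, 4]
x2 = [0, 5, 1, 2]
y = [2, 4, 5, 9]
a, b = 3, -2

# (1) symmetric
symmetric = cov(x1, y) == cov(y, x1)

# (2) linear in the first argument (and, by symmetry, in the second)
combo = [a * u + b * v for u, v in zip(x1, x2)]
bilinear = cov(combo, y) == a * cov(x1, y) + b * cov(x2, y)

# (3) Cov(X, X) is never negative and vanishes for a constant variable
nonnegative = cov(x1, x1) >= 0
constant = [7, 7, 7, 7]
zero_for_constant = cov(constant, constant) == 0

# Variables with the same deviations cannot be told apart.
shifted = [u + 10 for u in x1]
same_deviation = cov(shifted, y) == cov(x1, y)

print(symmetric, bilinear, nonnegative, zero_for_constant, same_deviation)
```

The last check shows why an inner product is obtained only for the deviations: adding a constant to a variable changes the variable but not any of its covariances.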

Some content on this page may previously have appeared on Citizendium.

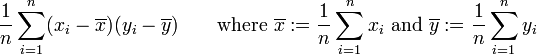

![[a_i] := \sum_{i=1}^n a_i](../w/images/math/e/8/9/e89b0ad08dd5dcd645ff1acc06ff61df.png)

![{1\over n}( [ x_i y_i ] - [x_i][y_i] )](../w/images/math/5/a/9/5a95f5bd9bc3336364b49b4424967902.png)